The recent total war bombardment of Brian Krebs’ site, and the subsequent allegation that the traffic emanated from compromised home routers, cameras, baby monitors, doorbells, thermostats, and whatnot, got me thinking.

> Prolexic said the 665 Gbps attack that hit my site tonight is almost twice the size of the largest attack they've seen previously.
>
> briankrebs (@briankrebs), September 21, 2016

So DDoS is a thing, and as much as I enjoy the lampooning of IoT by everyone’s favourite wat account, @internetofshit, I wonder if the status quo of insecure consumer devices will have an unexpected knock-on effect.

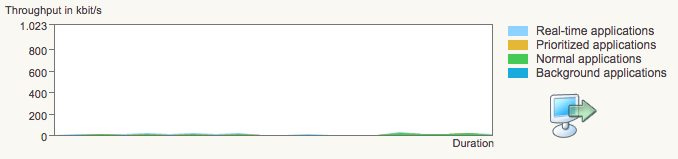

Previously, DDoS traffic was assumed to come from compromised servers (waves hand in the approximate direction of the cloud) or malware-infected PCs. For the former, cloud providers have gotten pretty good at rooting out insecure hosts and booting them off their networks. For the latter, organised crime has pretty much figured out that deleting stuff off people’s home computers is less profitable than encrypting said stuff and holding it for ransom. There’s also always the chance of someone spotting unexpected outbound traffic from a PC (that is one thing the host-based AV industry does seem to be good at), because there’s usually a human sitting in front of the device whenever it’s awake. But not so with the cable router or ADSL modem sitting under the hall table, or the IP-connected baby monitor you installed in the nursery, or the hundreds of other IP-connected whatevers produced for the lowest possible cost because that is what we, as consumers, demand: price, the ultimate arbiter of quality.

My home router. What’s it doing? I’ve got no idea.

Homes filled with tiny Linux boxes running weak software are a tempting target. Not just because owning them is easy, but because as long as the devices continue to work as reliably as they did before compromise, nobody is going to suspect that their excess capacity is being soaked up under someone else’s control. Embedded devices have other attractive properties: they’re usually online 24/7, not sporadically like a laptop trying to conserve battery power, and they commonly enjoy a wired Ethernet connection, not the whims of a rapidly changing WiFi network. Can any of you reading this post tell me that you know the provenance of every packet that leaves your home network?

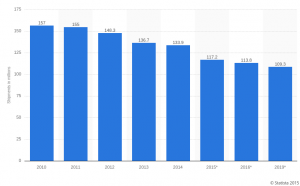

But back to Krebs and the IP cameras. With the nose dive in desktop and laptop sales, it’s pretty clear that the botnet action has moved to the embedded space. Assuming the attribution is correct, then DDoS has gone from being manageable to very not manageable, quickly. It’s the early 2000s spam wars all over again, and companies that make real money on the internet are going to expect a solution. And when I say solution, I mean litigation.

Who’s to blame for shitty insecure consumer devices? Who’s going to receive the summons?

Will it be the local ISPs, or carriers? Unlikely. In the States, carriers enjoy common carrier status, which shields them from liability for crimes committed using their service. In Australia the situation is less clear, but the message isn’t: ISPs are not interested in policing the behaviour of the users of their service. If you’re Walmart and you’ve been forced offline by 1 Tbps of traffic during the holiday sales, you can forget about suing ISPs.

Ok, what about the device manufacturers themselves? I’ll be honest, I don’t have the stamina to read the EULA paperwork that comes with a device so I cannot assert this as a fact, but I would be amazed if the liability for the damage the device did, if not expressly waived by opening the box, exceeded the purchase price. Looking towards other industries, car manufacturers are not liable for the damage their vehicles do in the case of misuse, which is why in many parts of the world licensing a vehicle to drive on a public road requires compulsory third party insurance.

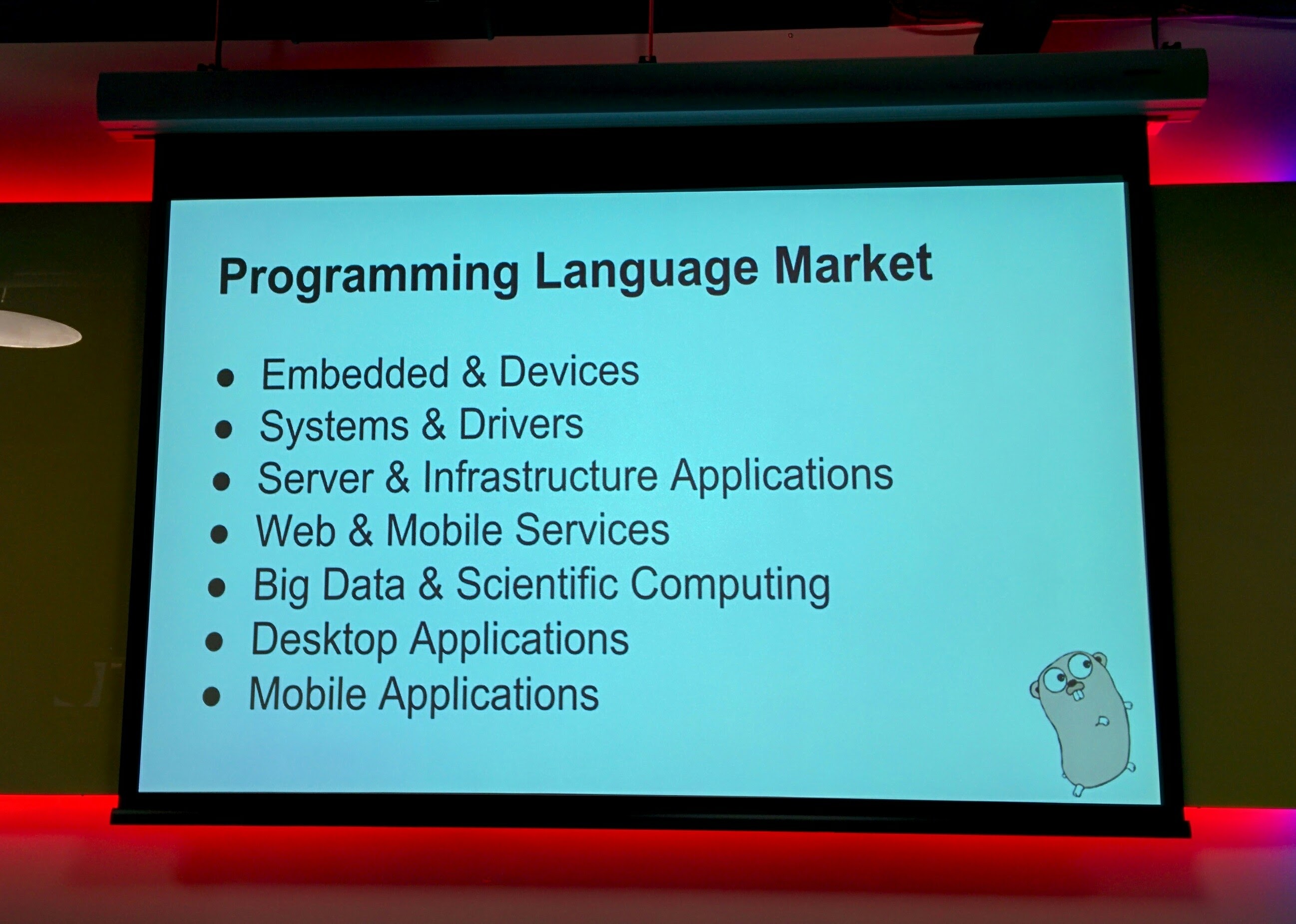

Let’s cut to the chase: the reason IoT DDoS is a thing is that the security of the software inside those devices is laughable. You can debate why that is, but it does not change the fact that all the other members of this supply chain have deftly passed the buck on this one, and so the liability for insecure software rests with us, the authors of said software. Because, as Robert C. Martin likes to remind us, software rules the world, so programmers rule the world.

Serious stuff.

If you look at the history of unsafe products (food, paint, hair spray, electrical goods), governments have forced manufacturers to improve with a combination of regulations and import controls. In Australia, for example, it is illegal to import a product that connects to the mains supply unless its plug has the correct shroud over the live and neutral pins. This is how governments work: they cut off a manufacturer’s air supply by forbidding them from importing their products into the country. That tends to effect change, smartly.

Will IoT be the tipping point that forces the software industry to adopt voluntary, or mandatory, regulation or procedural standardisation?