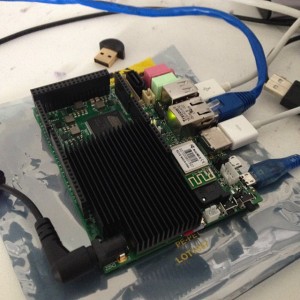

This is a quick post explaining how to install Ubuntu 12.04 on your Udoo Quad board (I’m sure the instructions can be easily adapted to the Dual unit as well).

The Udoo folks have made two distributions available, Linaro Ubuntu 11.10 and Android 4.2.2. The supplied Linaro distribution is very good: it runs what looks like Gnome 3 and comes with Chrome and the Arduino IDE installed to get you started. If you just want to enjoy your Udoo, you could do a lot worse than sticking with the default distro.

Ubuntu Core

Canonical makes a very small version of Ubuntu called Ubuntu Core. Core is, as its name suggests, the bare minimum required to boot the OS.

As luck would have it there is an armhf build of 12.04, so I thought I would see if I could get Ubuntu Core 12.04 running on the Udoo. There weren’t a lot of docs on the boot sequence for the Udoo, but I had enough experience with other U-Boot based systems to figure out that U-Boot is written directly to the start of the card; it then mounts your root partition and looks for the kernel there.

The first step is to download the Udoo supplied image, dd it to a microSD card and boot the Udoo from it. I then wrote the same image to a second microSD card and connected that to the Udoo with a USB card reader.
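Writing the image might look something like the following. This is a minimal sketch, assuming a Linux host with GNU dd; `write_image` is a made-up helper, and the image file name and `/dev/sdX` in the usage comment are placeholders rather than anything from the Udoo docs. Double check the device name first, because dd will happily overwrite whatever you point it at.

```shell
# write_image IMAGE DEVICE
# Copy a raw disk image onto a block device (or a plain file, for a dry run).
write_image() {
    img="$1"
    dev="$2"
    # Refuse to run without both arguments, so a typo cannot hit the wrong target.
    if [ -z "$img" ] || [ -z "$dev" ]; then
        echo "usage: write_image IMAGE DEVICE" >&2
        return 1
    fi
    # conv=fsync (GNU dd) flushes the data to the card before dd exits.
    dd if="$img" of="$dev" bs=1M conv=fsync
}

# Hypothetical usage, as root; replace both names with your own:
# write_image udoo_image.img /dev/sdX
```

Run it once per card, since both cards start out with the same Udoo image.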

There are probably ways to avoid having to use two cards, but as Ubuntu Core is so minimal it helps to have a running ARM system from which you can chroot into the Ubuntu 12.04 image and apt-get some more packages that will make it easier to work with (for example, there is no editor installed in the base Ubuntu Core image).

Once the Udoo is booted up using the Linaro 11.10 image, perform the following steps as root.

Erase the root partition on the target SD card and mount it.

# mkfs.ext3 -L udoo_linux /dev/sda1
# mount /dev/sda1 /mnt

Unpack the Ubuntu Core distro onto the target

# curl http://cdimage.ubuntu.com/ubuntu-core/releases/12.04/release/ubuntu-core-12.04.3-core-armhf.tar.gz | tar -xz -C /mnt

Next you need to copy the kernel and modules from the Linaro 11.10 image

# cp -rp /boot/uImage /mnt/boot/
# cp -rp /lib/modules/* /mnt/lib/modules/
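Before going any further it can be worth checking that the target really has what the boot process will look for. A rough sketch, assuming the layout used above (kernel at `boot/uImage`, modules under `lib/modules`, and `sbin/init` from the Ubuntu Core tarball); `check_rootfs` is a hypothetical helper name, not an existing tool.

```shell
# check_rootfs ROOT
# Report whether ROOT looks like a complete root filesystem for this setup.
check_rootfs() {
    root="$1"
    status=0
    # The kernel image copied from the Linaro card.
    if [ ! -f "$root/boot/uImage" ]; then
        echo "missing: $root/boot/uImage" >&2
        status=1
    fi
    # At least one kernel version directory under lib/modules.
    if ! ls -d "$root"/lib/modules/*/ >/dev/null 2>&1; then
        echo "missing: kernel modules under $root/lib/modules" >&2
        status=1
    fi
    # init should have arrived with the Ubuntu Core tarball.
    if [ ! -e "$root/sbin/init" ]; then
        echo "missing: $root/sbin/init" >&2
        status=1
    fi
    return "$status"
}

# check_rootfs /mnt && echo "rootfs looks complete"
```

It only checks that the files exist, not that the kernel and modules match each other, so treat a pass as a hint rather than a guarantee.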

At this point the new Ubuntu 12.04 image is ready to go, but it is a very spartan environment so I recommend the following steps

# chroot /mnt
# adduser $SOMEUSER
# adduser $SOMEUSER adm
# adduser $SOMEUSER sudo
# apt-get update
# apt-get install sudo vim-tiny net-tools  # and any other packages you want

Fixing the console

The Udoo serial port operates at 115200 baud, but by default the Ubuntu Core image is not configured to run a getty on /dev/console at that speed. The simplest fix is

# cp /etc/init/tty1.conf /etc/init/console.conf

Edit /etc/init/console.conf and change the last line to

exec /sbin/getty -8 115200 console
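If you would rather script the copy and the edit in one go, something like this should do it. It is a sketch that assumes the getty line in tty1.conf starts with `exec /sbin/getty`, which is what I would expect from the stock 12.04 file; `make_console_conf` is a made-up helper name.

```shell
# make_console_conf SRC DST
# Copy an upstart getty job, pointing it at the console at 115200 baud.
make_console_conf() {
    src="$1"
    dst="$2"
    # Replace the whole exec line; every other line is copied unchanged.
    sed 's|^exec /sbin/getty.*|exec /sbin/getty -8 115200 console|' "$src" > "$dst"
}

# Inside the chroot:
# make_console_conf /etc/init/tty1.conf /etc/init/console.conf
```

Have a quick look at the result afterwards; if the source file has no matching exec line, sed copies it through untouched and the console will still be misconfigured.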

And that is it. Exit your chroot

# exit

Unmount your 12.04 partition

# umount /mnt
# sync

Shut down your Udoo, take out the Linaro 11.10 card, insert your 12.04 card and hit the power button. If everything worked you should see a login prompt on the screen, if you have connected an HDMI monitor, and on the serial port, if you’ve connected that up (recommended).

After that, log in as the user you added above, sudo to root and finish your setup of the host.

Oh, and in case you were wondering, early Go benchmarks put this board about 20% faster than my old Pandaboard Dual A9 and 10% faster than my recently acquired Cubieboard A7 powered hardware.

One feature I really appreciate is the onboard serial (UART) to USB adapter which means you can get access to the serial console on the Udoo with nothing more than a USB A to Micro B cable.