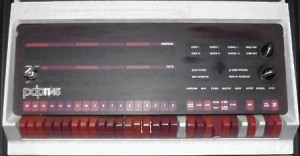

This is a post about the performance of my avr11 simulator. Specifically about the performance improvements I’ve made since my first post, and the surprises I’ve encountered during the process.

Old school profiling

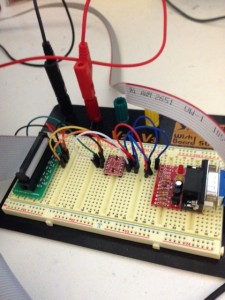

Because avr11 runs directly on the Atmega 2560 microcontroller, there is no simple way to measure the performance of various pieces of code externally.

I am aware that Atmel studio contains a fairly accurate simulator, but that package only runs on Windows. It also wasn’t clear if it can simulate the microSD card and xmem boards that avr11 requires. That left me needing to improvise some way of measuring the relative performance of avr11 while I made changes to the code.

The solution I came up with was a counter that increments every time cpu::step(), the function that processes one instruction, is called. The counter is defined as uint16_t so rolls over every 2^16 instructions. Combined with the built in millis() function, which prints the number of milliseconds since reset, I had a crude way of timing how long avr11 takes to dispatch instructions.

cpu::step();

if (INSTR_TIMING && (++instcounter == 0)) {

Serial.println(millis());

}

From there the process became very iterative. Each evening I would spend a few hours playing with various tweaks in the Go version of avr11, then I would transpose them over to the C++ code a piece at a time, testing as I went and watching the cycle count.

Some promising results

TL;DR – Instruction dispatch was an average of 144 microseconds with the mmu disabled1, 160 with the mmu enabled. It is now 60 microseconds with the mmu disabled, and a few microseconds more with the mmu enabled. 2.4x improvement.

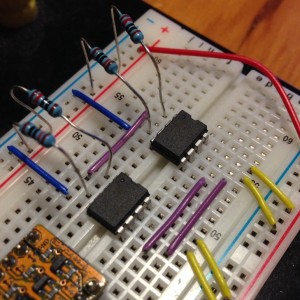

The first big improvement came from switching from the Arduino SD classes to the SdFat library. SdFat gives you more control over the interactions with the card, and also lets you set the speed of the SPI bus, on 16 Mhz Atmels, to full speed, rather then the previous 1/2 speed maximum. This gave me an 8-10% improvement in memory access times to the SPI SRAM shield.

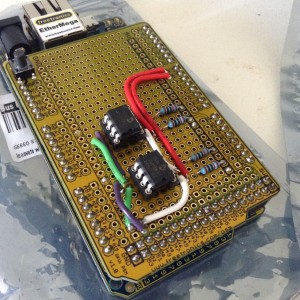

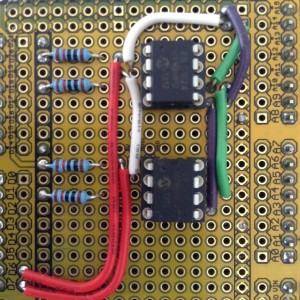

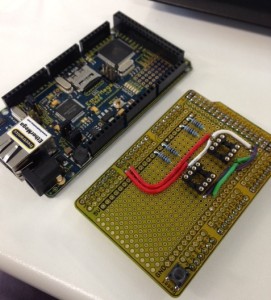

The next big improvement came from switching from the SPI SRAM shield to a Rugged Circuits’ QuadRAM board. This eliminates the SPI bus entirely by extending the address space of the Atmega 2560 to the full 64 kilobytes and adding banking to access up to 512 kilobytes. This gave another 20% improvement.

After that things got harder. The remaining 30 microsecond improvements came from careful rewriting of all the hot paths and reducing the data types involved to their smallest possible type.

A surprising discovery

The most surprising discovery of all was made as I started to comment out pieces of the code to get a baseline for the inner loop of the simulator.

After whittling it down to simply fetching the instruction at the current PC I’d arrived at a baseline of 21 microseconds. That is just under 50 kilohertz simulated performance; not great, especially considering this isn’t processing the instruction.

Digging a little further I discovered that this one shift to set the correct memory bank costs 4-5 microseconds. Out of a total time of 21 microseconds, that is close to 25% in just one line.

if (a < 0760000 ) {

// a >> 15 costs nearly 5 usec !!

uint8_t bank = a >> 15;

xmem::setMemoryBank(bank, false);

return intptr[(a & 0x7fff) >> 1];

}

In retrospect this shouldn’t have been a surprise. The Atmega processor is an 8 bit processor. It has some provisions for 16 bit quantities, but they are expensive. 32 bit quantities probably receive no hardware support, and I think in this instance avr-gcc is calling a helper function for the unaligned shift.

A quick hack using a cast and some shifts shaved 4 microseconds off the inner loop, clearly attributable to this inefficient shift. The proper fix will probably involve more radical surgery using a union datatype.

Conclusion

If this post has a moral, it would have to be

Don’t guess, always profile your code.

As for the performance of avr11, it stands a 16 kilohertz simulated clock speed. Possibly with some extreme C surgery this can be improved to 20 kilohertz. Past that, the possibilities running on the Atmega 2560 look grim.

I’d be interested in hearing from other Atmel users about their profiling methods.

- The PDP-11/40 I am simulating has an 18 bit address space. However the CPU is only 16 bit and cannot directly generate 18 bit addresses so a memory management unit is used to rewrite addresses as they leave for the UNIBUS. The MMU adds a small overhead to memory reads and writes when enabled. In the original hardware that was somewhere on the order of 90 nanoseconds. In simulation it’s probably under 5 microseconds.