In October this year I had the privilege of speaking at the GothamGo conference in New York City. As I talk quite softly, and there were a few problems with the recording, I decided to write up my slide notes and present them here.

If you want to see the video of this presentation, you can find it on YouTube.

The Legacy of Go

I want to open with a question — How will Go be remembered ?

In posing this question I do not wish to appear morbid, or to suggest that Go’s best days are already behind it. Rather, in the spirit of this conference’s theme, I want to speculate on what will be the biggest mark Go will leave on our profession.

So to restate the question — What will be the legacy of Go ?

To set the stage to answer this question, let’s look at some historical examples.

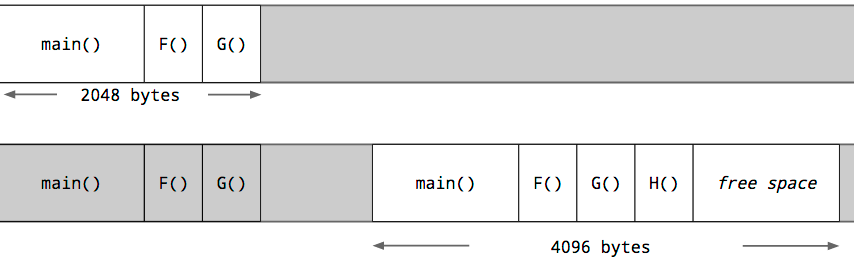

C

C, the progenitor of an entire family of curly brace languages. If you were to briefly summarise C’s legacy, how would you describe it ?

One of my favourite descriptions of C is a portable high level assembly language. C certainly wasn’t the first high level language, but it was one of the first to take portability seriously. Additionally C has the distinction of being the language used to write the operating system of every computer in this room, and probably many of the microcontrollers as well.

Before C there was assembly language, and there is no indication that we are in the period that could be classified as after C.

This is C’s legacy.

C++

Let’s try another. How would you describe C++’s legacy ?

I think for many C++ is considered the Rolls Royce of mainstream object orientation.

C++ also codified the ideas of zero cost abstractions. In fact, the only abstractions C++ developers prefer to use are the ones that come with no cost, assuming of course that you don’t consider compile time a cost.

Ruby on Rails

For my third example, something a little different. Maybe you haven’t used Ruby on Rails, but I’d wager you’ve probably heard of it. How would you describe the legacy of Ruby on Rails ?

My take away from Rails was the standardised project layout. Every Rails project had models in the models directory, controllers in the controllers directory, and views in the views directory. This was Rails’ mantra of convention over configuration.

Compare this to the previous generation of web frameworks, all different in ways that are at best described as belligerent.

Now, post Rails, all web frameworks look alike. Words like routing, controllers, middleware, assets, are codified in our lexicon because of Rails. This is the sort of legacy I’m talking about.

So, now I’ve established a framework, let’s turn to the conference’s namesake.

A simple programming language

At the start of the year I had the opportunity to give a talk in India entitled Simplicity and Collaboration. As I was lucky enough to be giving the closing keynote this gave me an opportunity to try a real table thumping call to action.

I mean, who doesn’t want to be simple ? And what better way to frame a debate than simple: good, complexity: obviously bad ? Could we say then that simplicity will be Go’s lasting legacy ?

Perhaps, but perhaps our frame of reference is a little skewed. In preparing this talk, I found numerous anecdotes from language designers, who, reflecting back on their own achievements, lamented complexity’s siren song.

Some argued that the solution to complexity was abstraction, others felt abstraction itself was the root of the problem. However, unanimously these luminaries believed that complexity must be avoided, and cautioned others to strive for simplicity in their designs.

Is Go the language which delivers this long sought after promise ? I think it’s probably too early to say. The signs are positive, but this is probably not going to be the thing that I believe people will remember most about Go.

Fitness for purpose

Go will certainly not be remembered as an academic language; it breaks only the minimum of new ground, preferring instead to consolidate on a corpus of proven ideas.

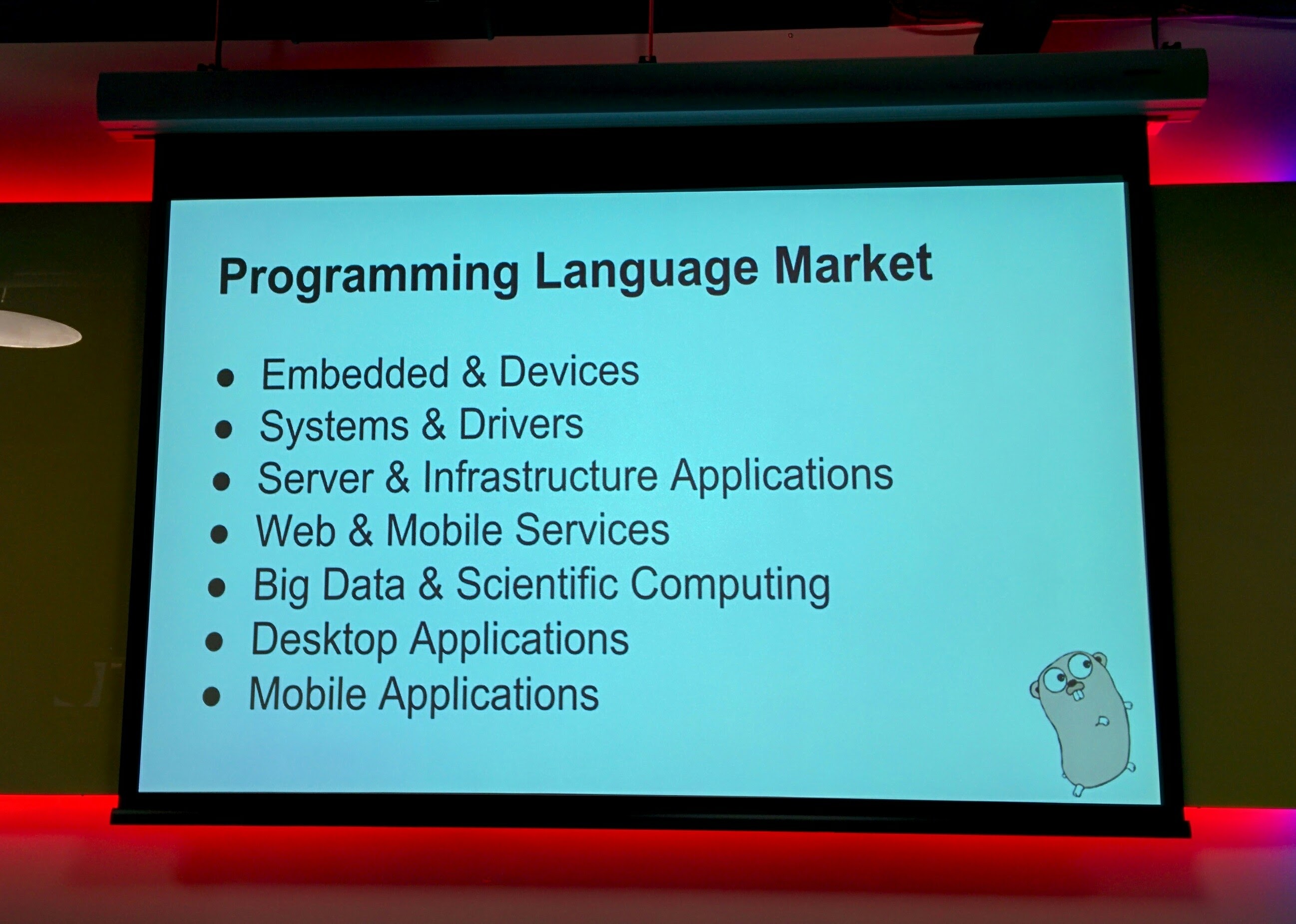

One aspect which is contributing to our language’s success is what I term its fitness for purpose.

As Rob Pike wrote in 2012, Go is a language designed to integrate with an existing software development ecosystem. I believe Go’s popularity is due in large part to the care its designers gave to every aspect of the language’s interaction with the complete software development life cycle.

But sadly, this is not what I believe people will remember our language for, because few outside the Go community appear to appreciate the holistic nature of Go’s design.

However, in discussing the motivations that drove the design of the language, we see a clue to its possible legacy.

Tooling

I think it is the tooling that has grown, not in spite of the language, but in deliberate symbiosis, which deserves recognition.

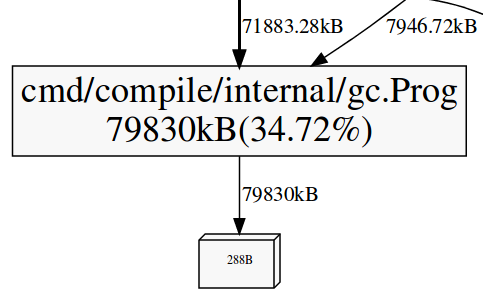

In his opening keynote at GopherCon this year Russ Cox spoke about the need for mechanically refactored and generated code to be indistinguishable from code written by a human. In particular I feel gofmt deserves most of the credit here. While more powerful translations are possible, the low barrier to entry for gofmt has ensured its ubiquity.

It’s not enough that the code is well formatted according to local custom; what matters is that there is precisely one way Go code should be formatted. This cannot be overstated.

The result is that nowadays all Go code is formatted with gofmt, and the little which is not is viewed with deep suspicion.

Just as no web framework will call a controller something different, I believe that no future language will be considered complete without a canonical style, and a tool to enforce it.
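
To make the "precisely one way" point concrete, here is a minimal sketch using the standard library's go/format package, which applies the same formatting rules as gofmt. The two input layouts and the `canonical` helper are hypothetical, invented purely for illustration.

```go
package main

import (
	"fmt"
	"go/format"
)

// canonical returns src laid out the way gofmt would print it.
// (Hypothetical helper name, not part of any library.)
func canonical(src string) (string, error) {
	out, err := format.Source([]byte(src))
	if err != nil {
		return "", err
	}
	return string(out), nil
}

// Two made-up layouts of the same program, as two authors with
// different habits might have typed them.
const cramped = "package main\nimport \"fmt\"\nfunc main(){\nfmt.Println( \"hi\" )\n}\n"
const sprawling = "package main\n\n\n\nimport   \"fmt\"\n\nfunc  main ( )  {\n        fmt.Println(\"hi\")\n}\n"

func main() {
	a, err := canonical(cramped)
	if err != nil {
		panic(err)
	}
	b, err := canonical(sprawling)
	if err != nil {
		panic(err)
	}
	fmt.Println(a == b) // both layouts collapse to the same text
	fmt.Print(a)
}
```

Whatever spacing habits the two authors started with, the formatted results are byte for byte identical, which is what lets machine generated code blend in with code typed by hand.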

Pop Culture

Earlier this year my colleague Katherine Cox-Buday gave a talk at GopherCon where she built upon a conjecture by Alan Kay to illustrate some concerning dogma that she observed amongst Go developers.

I enjoyed this talk very much, especially Kay’s rebuttal that our profession is not a science but a pop culture.

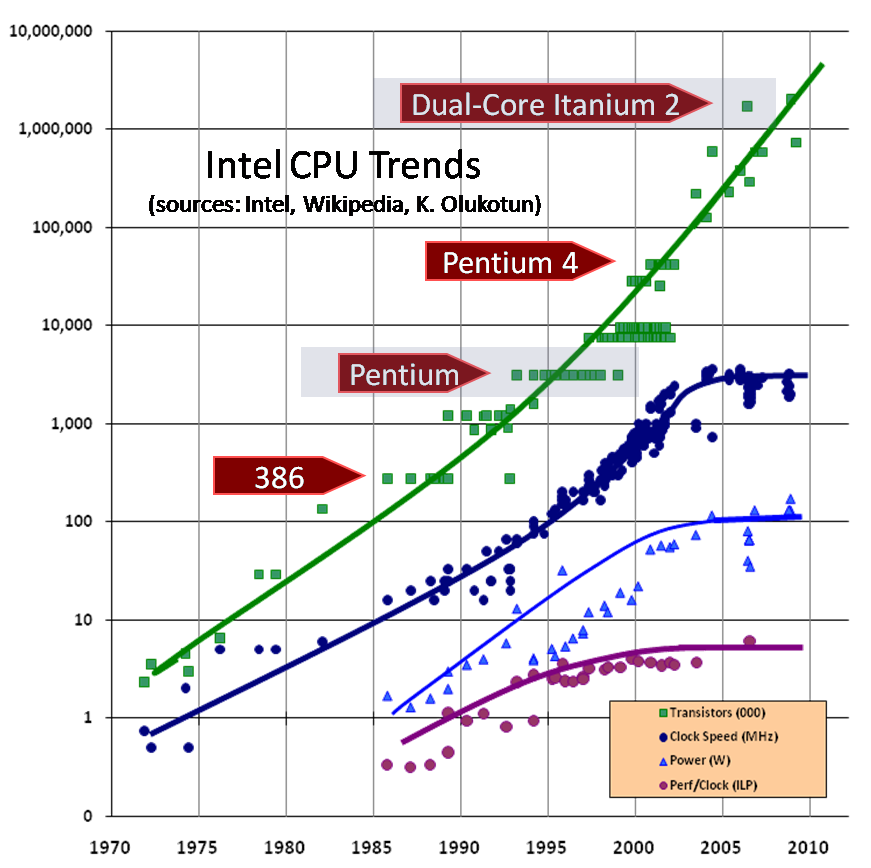

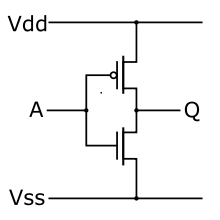

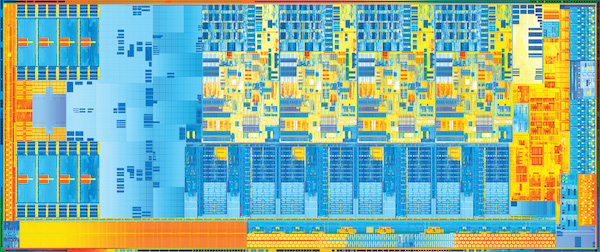

We live in what has been described as the information age. An age of digitisation. An age of the transistor and the computer. These are pervasive forces reshaping our society. Thus I think it is impossible to separate the role of those who program, from the impact of the computer itself.

Accepting Kay’s critique of our industry, pop culture is responsible for some of the most iconic ideas in our society, and in answering the question of Go’s own legacy, it makes good sense to investigate the role of the programmer within a wider social context.

To explain what I mean, permit me to digress for a moment.

Denim, sunglasses and portable music

In 1870 Jacob Davis, a tailor from Reno, Nevada, was asked by one of his customers to create a hard wearing pair of trousers for her husband.

By reinforcing the weak points on the seams and pockets with copper rivets, Davis created a durable garment that became an overnight sensation.

Within a year he was unable to keep up with demand and approached his supplier of denim, one Levi Strauss, for financial support in patenting his design.

Today we know them simply as Levi jeans. Although Davis’ signature rivet was later removed over concerns that it was damaging the furniture, the signature Levi 501 became a cultural icon.

A symbol of rugged individualism, and smart-casual iconoclasm.

In 1936, Bausch and Lomb, a medical instrument company from Rochester, working under contract for the US Army, produced a pair of sunglasses designed to aid pilots suffering from eye strain and migraines.

Initially called the “Anti-Glare”, the glasses were renamed a year later when the eyewear division was spun out into a subsidiary. The Ray Ban company, as we now know it, released its signature Ray Ban 3025 Aviator.

With the help of strong promotional efforts, Ray Ban’s Aviators became synonymous with action and adventure. The hero, and the maverick.

In 1979, the Sony Corporation released the TPS-L2. Better known in most markets as the Walkman, it created a new genre of music consumption.

The Walkman arrived at the perfect time to ride a wave of lifestyle advertising, allowing everyone to be a music enthusiast, not just the audiophile shut-in.

Sony effectively created the idea of personal music, liberating it from the tyranny of the radio disc jockeys, and making it private, personal, and portable. But Sony’s hubris, and a misplaced focus on the music producers, not consumers, caused them to stumble with the MiniDisc. On that last point, I’ll leave you to draw your own parallels with the software industry.

These are three unrelated tales which weave a story of cultural memes in our society.

Aviator sunglasses, created for a utilitarian purpose, have continued to represent an heroic, self confident, ideal. The same could be said for denim jeans. Both have continued to be remixed and riffed on in a way that would have pleased Warhol.

We see similar patterns in language design. Languages are not immune to the whim of popular fashion, unless of course they are Lisp, the perennial tie dye of languages.

A new language has to be sufficiently different

A new language, to be successful, has to be sufficiently different. Being only a minor improvement on a theme makes it too hard to capture mindshare.

Go epitomises this; it is a language that is heavily informed by the past, and at the same time, different enough to justify overcoming the opportunity cost of change.

A trend toward static typing

Types, like denim jeans, are the mainstay of our industry. Types have been decomposed, algebratised, templated, rejected, inferred, pushed to the side, then rediscovered. Types, like kitschy sunglasses or ripped jeans, may go out of style for a time, but never for very long.

Go sits atop the crest of a number of popular waves. One of these is a movement towards static type checking, which other languages are now retrofitting. They are retrofitting it not for performance, mind you (type inference has pretty much solved that problem), but for the productivity of their programmers.

But I think it is unlikely that Go will be the poster child of this movement. It is merely a participant, and few medals are given out for simply being present.

Interfaces

For me, it is Go’s interfaces that stand out. They represent a refinement of C++’s virtual base classes, an evolution through Java’s interface type, and have reached a point where they are divorced completely from both value and hierarchy.

Interfaces represent pure behaviour. In some ways Go’s interfaces are closer to Smalltalk’s vision of objects: defined not by class membership, but instead by behaviour.

I believe interfaces are the iconic feature of Go. They represent a refinement of many previous attempts but are themselves unique among mainstream languages.
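
As a small sketch of what "divorced from both value and hierarchy" can look like in practice, the example below defines one behaviour and two unrelated types that satisfy it without ever declaring that they do. `Describer`, `Celsius`, `Point` and `describeAll` are hypothetical names chosen for illustration.

```go
package main

import "fmt"

// Describer is pure behaviour: any type with a Describe method satisfies it.
type Describer interface {
	Describe() string
}

// Celsius is a plain number with a method attached.
type Celsius float64

func (c Celsius) Describe() string { return fmt.Sprintf("%.1f°C", float64(c)) }

// Point is a struct with no relationship to Celsius.
type Point struct{ X, Y int }

func (p Point) Describe() string { return fmt.Sprintf("(%d, %d)", p.X, p.Y) }

// describeAll accepts anything that behaves like a Describer.
func describeAll(items ...Describer) []string {
	out := make([]string, 0, len(items))
	for _, it := range items {
		out = append(out, it.Describe())
	}
	return out
}

func main() {
	fmt.Println(describeAll(Celsius(21.5), Point{X: 1, Y: 2}))
}
```

Neither type mentions `Describer`, and neither shares a base class with the other. The compiler checks only that the method set matches.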

So that’s two possible ideas for the legacy of Go. I hope you will indulge me one more.

The things Go took away

In his essay The last programming language, Robert C. Martin asks:

Are languages successful because they offer programmers more choice, or are they successful for the opposite reason, they remove choice ?

And while I’ll have to disagree with Martin’s conclusion that Clojure represents the last programming language, he does make a compelling argument.

In hindsight, the programming languages which have been successful, which have been remembered, and which have established a legacy, are the languages which have successfully removed a commonly accepted tenet of the programming establishment.

Languages like FORTRAN and COBOL removed the requirement to program directly in assembly language, despite howls that efficient programs could never be written in a high level language.

A decade later, languages like Pascal, PL/I and Algol removed direct transfer of control, replacing it with the pillars of structured programming: sequence, selection and iteration. They did so to cries that a real programmer would have goto prised from their cold dead fingers.

Can we find supporting evidence of Go’s legacy in the things that it chose to remove ?

Inheritance

I think a strong contender could be a lack of inheritance; Go took away subtypes. Everyone knows composition is more powerful than inheritance; Go just makes this non optional.

In the cacophony of hand wringing over a lack of templated types, nobody seems to be complaining that a lack of inheritance is hurting their ability to write programs in Go. It seems that nobody missed inheritance much after all.

However it also seems to me that people are not going to remember Go for taking away something that they never missed in the first place.
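
For readers who have not seen what composition looks like when it is the only option, here is a minimal sketch using struct embedding. The `Logger` and `Server` types are hypothetical, invented for this illustration.

```go
package main

import "fmt"

// Logger is a small reusable component.
type Logger struct{ Prefix string }

func (l Logger) Format(msg string) string { return l.Prefix + ": " + msg }

// Server embeds a Logger. Logger's methods are promoted onto Server,
// but a Server does not become a kind of Logger.
type Server struct {
	Logger
	Addr string
}

func (s Server) Start() string { return s.Format("listening on " + s.Addr) }

func main() {
	s := Server{Logger: Logger{Prefix: "http"}, Addr: ":8080"}
	fmt.Println(s.Start())
	// var l Logger = s // would not compile: a Server is not a Logger
}
```

The embedded field gives `Server` the convenience of calling `Format` directly, while the commented out assignment shows what was taken away: there is no is-a relationship to lean on.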

Semicolons;

Go is a curly braced, block structured language, but with a cute trick of the lexer. The semicolons are still there, we just hide them from the author. But, this is also not a new trick. JavaScript made semicolons optional, and sometimes it works.

Go’s implementation is also not an original idea; it was taken directly from Martin Richards’ BCPL.

So while Go finally removed semicolons, I don’t think it can claim credit for the idea.
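
The hidden semicolons can be observed with the standard library's go/scanner package, which reports an inserted semicolon with the literal "\n" rather than ";". The sketch below counts both kinds; `countSemicolons` and the sample snippets are hypothetical, written only to illustrate the lexer's behaviour.

```go
package main

import (
	"fmt"
	"go/scanner"
	"go/token"
)

// countSemicolons scans src and reports how many semicolons the author
// typed and how many the lexer inserted on their behalf.
func countSemicolons(src string) (typed, inserted int) {
	fset := token.NewFileSet()
	file := fset.AddFile("example.go", fset.Base(), len(src))

	var s scanner.Scanner
	s.Init(file, []byte(src), nil, 0)
	for {
		_, tok, lit := s.Scan()
		if tok == token.EOF {
			return typed, inserted
		}
		if tok == token.SEMICOLON {
			if lit == ";" {
				typed++
			} else {
				// The scanner uses "\n" as the literal for a semicolon
				// it inserted at a line end or at end of file.
				inserted++
			}
		}
	}
}

func main() {
	typed, inserted := countSemicolons("x := 1\ny := x + 2\n")
	fmt.Println(typed, inserted)
}
```

The author of the two line snippet typed no semicolons, yet the token stream the parser sees contains one at the end of each statement.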

Threads

In my mind, of all the possible candidates that Go has removed, it is the removal of threads that will be its most profound contribution.

This is not to say that Go programs do not use threads, any more than you can say structured programs are not compiled into branch and jump instructions.

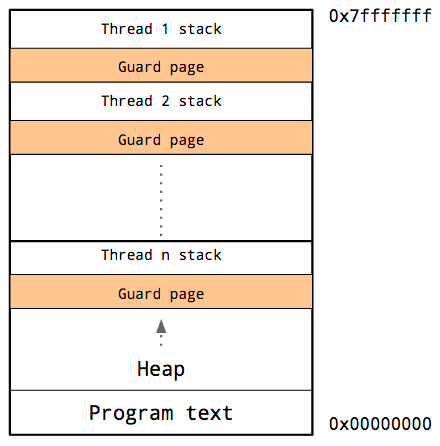

But Go programmers no longer have to concern themselves with thread management, or as Uncle Bob would say, Go programmers are restricted from directly controlling the thread their code runs on.

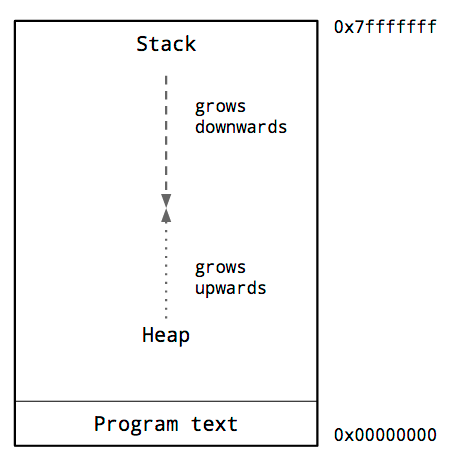

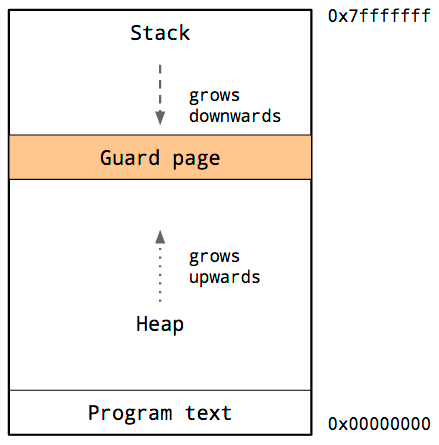

Coincidentally, the removal of threads from the Go programmer’s model means the removal of a requirement to care about the stack, unlocking the much older technique of recursion as an alternative to state machines or mutable state.

Goroutines are cheap, so cheap that we can structure our programs in a straightforward imperative fashion without having to worry about the overhead of one operating system thread per goroutine.

Go took away many things, but in removing threads and a need to care about the stack, replacing them instead with a more coherent idea of goroutines and channels, I think it has made its most powerful mark.
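
As a rough sketch of that straightforward imperative style, the example below starts one goroutine per unit of work and gathers the answers over a channel, with no thread pool or thread handle in sight. `sumSquares` and the choice of 1000 tasks are hypothetical, picked only for illustration.

```go
package main

import "fmt"

// sumSquares starts one goroutine per input and gathers the results
// on a channel. The runtime decides which threads run them.
func sumSquares(n int) int {
	results := make(chan int)
	for i := 1; i <= n; i++ {
		go func(v int) {
			results <- v * v
		}(i)
	}

	total := 0
	for i := 0; i < n; i++ {
		total += <-results // arrival order varies, the sum does not
	}
	return total
}

func main() {
	fmt.Println(sumSquares(1000))
}
```

A thousand operating system threads would be a design decision worth a meeting. A thousand goroutines is just a loop.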

gofmt, interfaces, goroutines

To recap: gofmt, interfaces, and goroutines. Some of these are new ideas, others are not.

The goal of this presentation was not to identify three new innovations in Go, but rather to attempt to predict how a future generation of programmers will look back upon Go’s legacy.

Go is still young, with a long productive life ahead of it, and that means that almost all of the Go code that will be written has yet to be written.

Similarly, while the community of Go users is growing, compared to the number of people who will use Go during its lifetime, we are but a tiny fraction. Therefore we should optimise for this larger group of people who have yet to write any Go code.

This means more examples, more blog posts, and more books.

This means more education and more training — I think Go is a fantastic language to teach the theory and the practice of programming, and we’ve barely scratched the surface here.

This means more user groups, more conferences, and more diversity — there is so much more to be done here, and we should look to established language communities, like Python for example, for guidance.

And this is the part where you come in. This is the time for you to lean in. This is the time for you to get involved.

Because this is your opportunity to decide how you want Go to be remembered.

Thank you.