This is a short blog post about my thoughts on using Go in anger through several workplaces, as a developer and an advocate.

What is $GOPATH?

Back when Go was first announced we used Makefiles to compile Go code. These Makefiles referenced some shared logic stored in the Go distribution. This is where $GOROOT comes from.

Back then, if you wrote Go code, you probably also used these Makefiles. While you could check out your source code anywhere, most people put their own Go code in what today we'd call $GOROOT/src: you had to compile Go from source, so this directory was always going to be present.

Towards the 1.0 release, goinstall and then go get solidified the use of domain names in import paths to provide a globally unique namespace. These tools introduced a new location into which Go code would be fetched. This location was separate from $GOROOT to make clear the distinction between code provided by the Go project and code written by the developer. By the time Go 1.1 was released in 2013, $GOROOT had been removed as a fallback option.

Why does $GOPATH exist?

$GOPATH exists for two main reasons:

- In Go, the

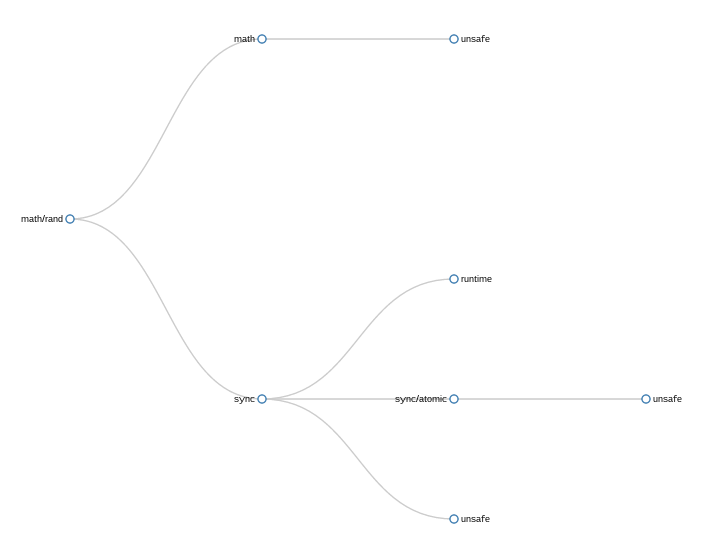

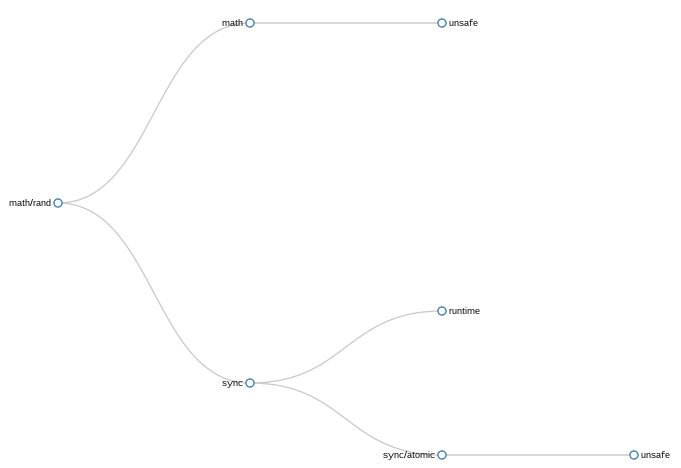

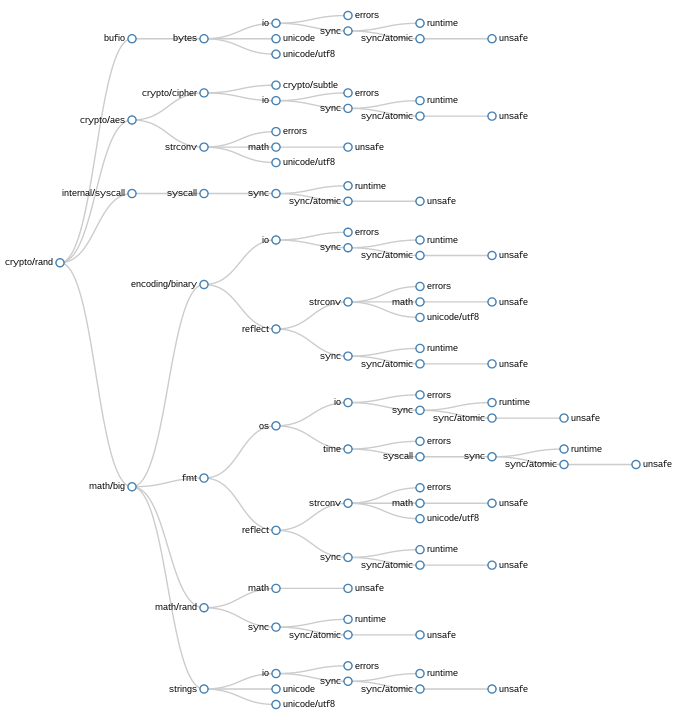

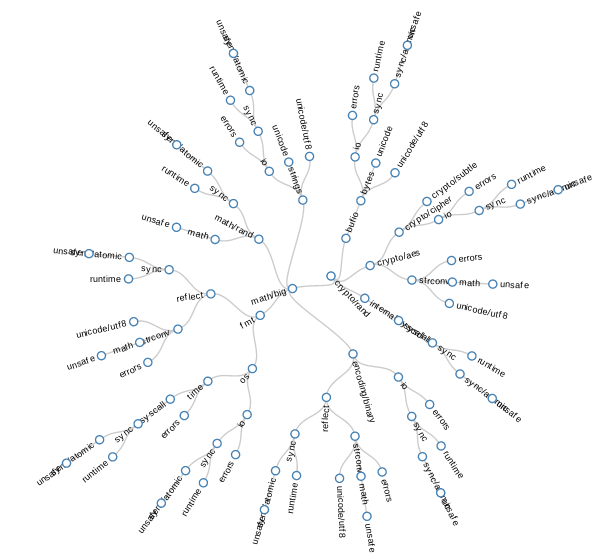

importdeclaration references a package via its fully qualified import path.$GOPATHexist so that from any directory inside$GOPATH/srcthe go tool can compute the absolute import path of the package in question.1 - A location to store dependencies fetched by

go get.

Having a per-user $GOPATH environment variable also means developers can use the go tool from any directory on their system to build, test and install code, but I suspect only a minority utilise this feature.

What’s wrong with $GOPATH?

In my experience, many newcomers to Go are frustrated with the single workspace $GOPATH model. They are confused that $GOPATH doesn’t let them check out the source of a project in a directory of their choice like they are used to with other languages. Additionally, $GOPATH does not let the developer have more than one copy of a project (or its dependencies) checked out at the same time without having to update $GOPATH constantly.

I think it is important to recognise that these issues are legitimate points of confusion for many newcomers (including those on the Go team) and act as a drag on Go adoption. As we’re on the cusp of a blessed dependency management tool for Go, I think it’s equally important to continue to question the base assumptions that this new tool will build on, namely requiring a $GOPATH.

In my opinion, any Go build tool needs to provide (in addition to actually building and testing code) a way for Go code checked out in an arbitrary location on disk to recover its intended fully qualified import path; the path other code will import it as.

The $GOPATH model answers this question by subtracting the prefix of $GOPATH/src from the path to the directory of the current package; the remainder is the package's fully qualified import path. This is why, if you check out a package outside a $GOPATH workspace, the go tool cannot figure out the package's fully qualified import path and everything falls apart.
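The directory arithmetic can be sketched in a few lines of Go. This is only an illustration, not the go tool's actual implementation: the function name importPathFromGOPATH and the example paths are made up, Unix-style paths are assumed, and $GOPATH is assumed to hold a single entry.

```go
package main

import (
	"errors"
	"fmt"
	"path/filepath"
	"strings"
)

// importPathFromGOPATH is a hypothetical helper. It strips the
// $GOPATH/src prefix from a package directory; whatever remains is
// treated as the package's fully qualified import path.
func importPathFromGOPATH(gopath, dir string) (string, error) {
	src := filepath.Join(gopath, "src")
	rel, err := filepath.Rel(src, dir)
	if err != nil {
		return "", err
	}
	// A result of "." means dir is $GOPATH/src itself; a leading ".."
	// means dir lies outside the workspace, so there is no prefix to subtract.
	if rel == "." || rel == ".." || strings.HasPrefix(rel, ".."+string(filepath.Separator)) {
		return "", errors.New(dir + " is not inside " + src)
	}
	return filepath.ToSlash(rel), nil
}

func main() {
	// Inside the workspace: the remainder is the import path.
	p, err := importPathFromGOPATH("/home/dave", "/home/dave/src/github.com/pkg/errors")
	fmt.Println(p, err)

	// Outside the workspace: the import path cannot be recovered.
	_, err = importPathFromGOPATH("/home/dave", "/tmp/errors")
	fmt.Println(err)
}
```

The second call fails for exactly the reason described above: once the directory is outside $GOPATH/src, there is nothing left to compute the import path from.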

What are some alternatives to $GOPATH?

I attempted to address both issues with gb, which gives developers the ability to check out a project anywhere they want. However, gb has no solution for libraries, and gb projects were not go gettable. What gb did show is that a new build tool that does not wrap the go tool is not forced to reorganise the world to fit into the $GOPATH model. This allowed gb users to include the source of all their dependencies in their project without the pitfalls of Go 1.6's vendor/ directory.

Recently, on a suggestion from Bill Kennedy, I built an experimental build tool, Kang, that records the expected import prefix in a manifest file. That prefix, rather than one computed by $GOPATH directory arithmetic, is used to determine the fully qualified import path.
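A rough sketch of the manifest idea, under stated assumptions: the manifest's format and file name are not described here, so the code below simply takes the recorded prefix as a string. The function name importPathFromPrefix and the example paths are hypothetical, and this is not the experimental tool's real code.

```go
package main

import (
	"fmt"
	"path"
	"path/filepath"
	"strings"
)

// importPathFromPrefix is a hypothetical helper. It joins the import
// prefix recorded in a project's manifest with the package's location
// relative to the project root. No $GOPATH is consulted.
func importPathFromPrefix(prefix, root, dir string) (string, error) {
	rel, err := filepath.Rel(root, dir)
	if err != nil {
		return "", err
	}
	// Packages must live at or below the project root.
	if rel == ".." || strings.HasPrefix(rel, ".."+string(filepath.Separator)) {
		return "", fmt.Errorf("%s is outside project root %s", dir, root)
	}
	// Import paths always use forward slashes; path.Join also cleans
	// away the "." produced when dir is the root itself.
	return path.Join(prefix, filepath.ToSlash(rel)), nil
}

func main() {
	// The project is checked out in an arbitrary location on disk.
	root := "/tmp/checkout"
	prefix := "github.com/example/project" // as recorded in the manifest (assumed)

	p, err := importPathFromPrefix(prefix, root, "/tmp/checkout/cmd/tool")
	fmt.Println(p, err)
}
```

The point of the design is that the project root can live anywhere: the prefix travels with the source, so the checkout location no longer matters.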

I'm also working on a similar, unfinished tool, Kodos, based on a suggestion from Brad Fitzpatrick. It uses the .git directory as a sentinel to determine the root of the project and, hopefully, infers the full import path from the git remote configuration.
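The two halves of that idea, finding the project root and deriving a prefix from the remote, might look roughly like the sketch below. Both function names are hypothetical, the remote URL is passed in as a string instead of being read from git's configuration, and only the common https, ssh and scp-like URL forms are handled. It is an illustration of the approach, not the unfinished tool's code.

```go
package main

import (
	"errors"
	"fmt"
	"net/url"
	"os"
	"path/filepath"
	"strings"
)

// findProjectRoot (hypothetical) walks up from dir until it finds a
// directory containing a .git entry, which acts as the sentinel.
func findProjectRoot(dir string) (string, error) {
	for {
		if _, err := os.Stat(filepath.Join(dir, ".git")); err == nil {
			return dir, nil
		}
		parent := filepath.Dir(dir)
		if parent == dir {
			return "", errors.New("no .git directory found")
		}
		dir = parent
	}
}

// importPrefixFromRemote (hypothetical) turns a git remote URL into an
// import prefix of the form host/path.
func importPrefixFromRemote(remote string) (string, error) {
	remote = strings.TrimSuffix(strings.TrimSpace(remote), ".git")

	// scp-like form: git@github.com:pkg/errors
	if !strings.Contains(remote, "://") {
		if at := strings.Index(remote, "@"); at >= 0 {
			remote = remote[at+1:]
		}
		colon := strings.Index(remote, ":")
		if colon <= 0 || colon == len(remote)-1 {
			return "", fmt.Errorf("unrecognised remote %q", remote)
		}
		return remote[:colon] + "/" + strings.Trim(remote[colon+1:], "/"), nil
	}

	// URL form: https://github.com/pkg/errors or ssh://git@github.com/pkg/errors
	u, err := url.Parse(remote)
	if err != nil {
		return "", err
	}
	repo := strings.Trim(u.Path, "/")
	if u.Hostname() == "" || repo == "" {
		return "", fmt.Errorf("unrecognised remote %q", remote)
	}
	return u.Hostname() + "/" + repo, nil
}

func main() {
	for _, r := range []string{
		"https://github.com/pkg/errors.git",
		"git@github.com:pkg/errors.git",
	} {
		prefix, err := importPrefixFromRemote(r)
		fmt.Println(prefix, err)
	}
}
```

A real tool would also have to decide what to do when the remote does not match the path other code imports the package as, for example a fork or a vanity import path; the sketch does not attempt to handle that.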

While these experiments are unfinished, both demonstrate that you can avoid the $GOPATH restrictions and retain compatibility with the go get ecosystem and, in the case of Kodos, potentially even avoid a manifest file.

Conclusion

Kang and Kodos use a lot of forked code from gb, which I hope to rectify over the New Year's break. If you are interested in contributing or, better yet, building your own Go tool to explore this problem space, Kang, Kodos, and gb are permissively licensed.

Notes:

1. This is notably different from the way imports work in scripting languages like Python and Ruby, which scan source code directories directly and insert them onto a global search path.